I’ve recently been working on a side project with my good friend Chris Lamb to scale up Google’s Deep Dream neural net visualization code to operate on giant (multi-hundred megapixel) images without crashing or taking an eternity. We recently got it working, and our digital artist friend (and fellow Plaxo alum!) Dan Ambrosi has since created some stunning work that’s honestly exceeded all of our expectations going in. I thought it would be useful to summarize why we did this and how we managed to get it working.

Even if you don’t care about the technical bits, I hope you’ll enjoy the fruits of our labor. 🙂

The ‘danorama’ back story

Dan’s been experimenting for the past several years with computational techniques to create giant 2D-stitched HDR panoramas that, in his words, “better convey the feeling of a place and the way we really see it.” He collects a cubic array of high-resolution photos (multiple views wide by multiple views high by several exposures deep). He then uses three different software packages to produce a single seamless monster image (typically 15-25k pixels wide): Photomatix to blend the multiple exposures, PTGui to stitch together the individual views, and Photoshop to crop and sweeten the final image. The results are (IMO) quite compelling, especially when viewed backlit and “life size” at scales of 8’ wide and beyond (as you can do e.g. in the lobby of the Coastside Adult Day Health Center in Half Moon Bay, CA).

“I’d like to pick your brain about a little something…”

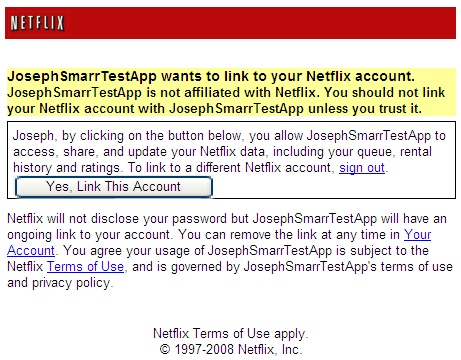

When Google first released its Deep Dream software and corresponding sample images, everyone went crazy. Mostly, the articles focused on how trippy (and often disturbing) the images it produced were, but Dan saw an opportunity to use it as a fourth tool in his existing computational pipeline: one that could potentially create captivating impressionistic details when viewed up close without distorting the overall gestalt of the landscape when viewed at a distance. After trying and failing to use the code (or any of the DIY sites set up to run the code on uploaded images) on his giant panoramas (each image usually around 250MB), he pinged me to ask if I might be able to get it working.

I had no particular familiarity with this code or with scaling up graphics code in general, but it sounded like an interesting challenge, and when I asked around inside Google, people on the Brain team suggested that, in theory, it should be possible. I asked Chris if he was interested in tackling this challenge with me (both because we’d been looking for a side project to hack on together and because of his expertise in CUDA, which the open source code could take advantage of to run the neural nets on NVIDIA GPUs), and we decided to give it a shot. We picked AWS EC2 as the target platform since it was an easy and inexpensive way to get a Linux box with GPUs (sadly, no such instance types are currently offered by Google Compute Engine) that we could hand off to Dan if/when we got it working. Dan provided us with a sample giant panorama image, and off we set.

“We’re gonna need a bigger boat…”

Sure enough, while we could successfully dream on small images, as soon as we tried anything big, lots of bad things started happening. First, the image was too large to fit in the GPU’s directly attached memory, so the code crashed. Second, the neural nets are trained to work on fixed-size 224×224 pixel images, so larger images had to be downscaled to fit, resulting in loss of detail. The solution to both problems (as suggested to me by the Deep Dream authors) was to iteratively select small sub-tiles of the image and dream on them separately before merging them back into the final image. By randomly picking the tile offsets each time and iterating for long enough, the whole image gets affected without obvious seams, yet each individual dreaming run is manageable.
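To make the tiling idea concrete, here is a minimal sketch of one way to pick randomly offset tiles. It is not the code we actually ran: the function names, the shifted-grid strategy, and the `dream_tile` callback (which would crop a tile, dream on it, and paste it back) are all assumptions made for illustration.

```python
import random


def tile_boxes(width, height, tile, rng=random):
    """Yield (left, top, right, bottom) boxes that cover the image once.

    The tile grid is shifted by a random offset, so the seams between
    tiles land in a different place on every pass.
    """
    ox = rng.randrange(tile)  # random horizontal grid offset
    oy = rng.randrange(tile)  # random vertical grid offset
    for top in range(oy - tile, height, tile):
        for left in range(ox - tile, width, tile):
            # Clip each grid cell to the image bounds.
            box = (max(left, 0), max(top, 0),
                   min(left + tile, width), min(top + tile, height))
            if box[0] < box[2] and box[1] < box[3]:  # skip empty cells
                yield box


def dream_in_tiles(width, height, tile, passes, dream_tile, rng=random):
    """Run the hypothetical dream_tile(box) callback over several passes."""
    for _ in range(passes):
        for box in tile_boxes(width, height, tile, rng):
            dream_tile(box)
```

Because every pass uses a fresh offset, no pixel sits on a tile boundary in every pass, which is why the seams wash out if you iterate for long enough.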

Once we got that working, we thought we were home free, but we still couldn’t use the full-size panoramas. The GPUs were fine now, but the computer itself would run out of RAM and crash. We thought this was odd since, as mentioned above, even the largest images were only around 250MB. But of course that’s the compressed JPEG size, and the standard Python Imaging Library (PIL) that’s used in this code first inflates the image into an uncompressed 2D array where each pixel is represented by 3×32 bits (one 32-bit value per color channel), so that the same image ends up needing 3.5GB (!) of RAM to represent. And then that giant image is copied several more times by the internal code, meaning even our beefiest instances were getting exhausted.
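The arithmetic is easy to check. The sketch below assumes three channels at 32 bits each, as described above; the panorama dimensions are hypothetical, picked only to land in the same ballpark as our images.

```python
def uncompressed_bytes(width, height, channels=3, bytes_per_channel=4):
    """Bytes needed to hold a decoded image as a dense array."""
    return width * height * channels * bytes_per_channel


# Hypothetical panorama size, chosen only to be in the right ballpark.
width, height = 24_000, 12_000

as_32bit = uncompressed_bytes(width, height)                      # 3x32 bits per pixel
as_8bit = uncompressed_bytes(width, height, bytes_per_channel=1)  # 3x8 bits per pixel

print(f"32-bit channels: {as_32bit / 1e9:.2f} GB")  # 32-bit channels: 3.46 GB
print(f"8-bit channels:  {as_8bit / 1e9:.2f} GB")   # 8-bit channels:  0.86 GB
```

Every additional full-size copy costs the same amount again, so a handful of intermediate copies is enough to exhaust a machine with tens of gigabytes of RAM.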

So we set about carefully profiling the memory usage of the code (and the libraries it uses like NumPy) and looking for opportunities to avoid any copying. We found the memory_profiler module especially helpful, as you can annotate any suspicious methods with @profile and then run python -m memory_profiler your_code.py to get a line-by-line dump of incremental memory allocation. We found lots of places where a bit of rejiggering could save a copy here or there, and eventually got it manageable enough to run reliably on EC2’s g2.8xlarge instances. There’s still more work we could do here (e.g. rewriting numpy.roll to operate in-place instead of copying), but we were satisfied that we could now get the large images to finish dreaming without crashing.
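For anyone who hasn’t used memory_profiler, here is a small stand-alone sketch of the workflow. The two functions are made-up examples (not from the deep dream code) that contrast a full copy with a view. The `ImportError` fallback is my own addition so the script still runs where the package isn’t installed.

```python
try:
    from memory_profiler import profile
except ImportError:
    # Fallback so the script still runs without the package: a no-op decorator.
    def profile(func):
        return func


@profile
def load_and_copy(n_bytes):
    original = bytearray(n_bytes)    # one full-size buffer
    duplicate = bytearray(original)  # a second full-size buffer (a real copy)
    return len(original) + len(duplicate)


@profile
def load_and_view(n_bytes):
    original = bytearray(n_bytes)                  # one full-size buffer
    window = memoryview(original)[: n_bytes // 2]  # a view, no new pixel data
    return len(original) + len(window)


if __name__ == "__main__":
    load_and_copy(50_000_000)
    load_and_view(50_000_000)
```

With the package installed, the per-line report should show a jump on the `duplicate` line and essentially none on the `window` line, which is the kind of signal we were hunting for.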

BTW, in case you had any doubts, running this code on NVIDIA GPUs is about 10x faster than CPU-only. You have to make sure Caffe is compiled to take advantage of GPUs and tell it explicitly to use one during execution, but trust me, it’s well worth it.
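Telling Caffe to use a GPU takes two calls from Python. The helper below is a hedged sketch rather than our exact code: `enable_gpu` is a name I made up, and it assumes pycaffe was built with GPU support (that is, without the CPU-only build option) and that device 0 is the GPU you want.

```python
def enable_gpu(device_id=0):
    """Switch pycaffe to GPU mode; return False if pycaffe is not importable."""
    try:
        import caffe
    except ImportError:
        return False
    caffe.set_device(device_id)  # choose which GPU to run on
    caffe.set_mode_gpu()         # run subsequent net operations on the GPU
    return True


if __name__ == "__main__":
    print("GPU mode enabled" if enable_gpu() else "pycaffe not found; staying on CPU")
```

On a multi-GPU instance, passing a different `device_id` per worker process is one way to spread jobs across the cards.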

Solving the “last mile” problem

With our proof-of-concept in hand, our final task was to package up this code in such a way that Dan could use it on his own. There are lots of tweakable parameters in the Deep Dream code (including which layer of the deep neural net you use to dream, how many iterations you run, how much you scale the image up and down in the process, and so on), and we knew Dan would have to experiment for a while to figure out what achieved the desired artistic effect. We started by building a simple Django web UI to upload images, select one for dreaming, set the parameters, and download the result. The Material Design Lite library made it easy to produce a reasonably polished-looking UI without spending much time on it. But given how long the full images took to produce (often 8-10 hours, executing a total of 70-90 quadrillion (!) floating point operations in the process), we knew we’d like to include a progress bar and enable Dan to kick off multiple jobs in parallel.

Chris took the lead here and set up Celery to queue, dispatch, and control asynchronous dreaming jobs routed to different GPUs. He also figured out how to multiply together all the various sub-steps of the algorithm to give an overall percentage complete. Once we started up the instance and the various servers, Dan could control the entire process on his own. We weren’t sure how robust it would be, but we handed it off to him and hoped for the best.
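The progress calculation boils down to treating the nested loops like digits of a mixed-radix number. This is a sketch of the idea, not Chris’s actual code; the nesting levels in the example (scales, tiles, iterations) and their counts are assumptions for illustration.

```python
from math import prod


def percent_complete(position, counts):
    """Overall progress through nested loops.

    counts[i] is the number of steps at nesting level i (outermost first);
    position[i] is the zero-based index of the current step at that level.
    """
    total = prod(counts)  # total number of innermost steps
    done = 0
    for index, count in zip(position, counts):
        done = done * count + index  # mixed-radix accumulation
    return 100.0 * done / total


# Hypothetical job: 4 scales x 10 tiles x 10 iterations = 400 steps.
# Third scale, sixth tile, first iteration (zero-based indices 2, 5, 0):
print(percent_complete((2, 5, 0), (4, 10, 10)))  # 62.5
```

A worker can report this number after each innermost step, and the web UI only has to poll it to draw the bar.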

“You guys can’t believe the progress I’m making”

Once we handed off the running EC2 instance to Dan, we didn’t hear much for a while. But it turned out that was because he was literally spending all day and night playing with the tools and honing his process. He started on a Wednesday night, and by that Saturday night he messaged us to say, “You guys can’t believe the progress I’m making. I can hardly believe it myself. Everything is working beautifully. If things continue the way they are, by Monday morning I’m going to completely amaze you.” Given that we’d barely gotten the system working at all, and that we still really didn’t know whether it could produce truly interesting output or not, this was certainly a pleasant surprise. When we probed a bit further, we could feel how excited and energized he was (his exact words were, “I’m busting, Jerry, I’m busting!”). It was certainly gratifying given the many late nights we’d spent getting to this point. But we still didn’t really know what to expect.

The following Monday, Dan unveiled a brand new gallery featuring a baker’s dozen of his biggest panoramic landscapes redone with our tool using a full range of parameter settings varying from abstract/impressionistic to literal/animalistic. He fittingly titled the collection “Dreamscapes”. For each image, he shows a zoomed-out version of the whole landscape that, at first glance, appears totally normal (keep in mind the actual images are 10-15x larger in each dimension!). But then he shows a series of detail shots that look totally surreal. His idea is that these should be hung like giant paintings 8-16’ on a side. As you walk up to the image, you start noticing the surprising detail, much as you might examine the paint, brush strokes, and fine details on a giant painting. It’s still hard for me to believe that the details can be so wild and yet so invisible at even a modest distance. But as Dan says in his intro to the gallery, “we are all actively participating in a shared waking dream. Science shows us that our limited senses perceive a tiny fraction of the phenomena that comprise our world.” Indeed!

From dream to reality

While Dan is still constantly experimenting and tweaking his approach, the next obvious step is to print several of these works at full size to experience their true scale and detail. Since posting his gallery, he’s received interest from companies, conferences, art galleries, and individuals, so I’m hopeful we’ll soon be able to see our work “unleashed” in the physical world. With all the current excitement and anxiety around AI and what it means for society, his work seems to be striking a chord.

Of course the confluence of art and science has always played an important role in helping us come to terms with the world and how we’re affecting it. When I started trying to hack on this project in my (copious!) spare time, I didn’t realize what lay ahead. But I find myself feeling an unusual sense of excitement and gratitude at having helped empower an artistic voice to be part of that conversation. So I guess my take-away here is to encourage you to (1) not be afraid to try to change or evolve open source software to meet a new need it wasn’t originally designed for, and (2) not underestimate the value in supporting creative as well as functional advances.

Dream on!
