The Widgets Shall Inherit the Web

Widget Summit 2008

San Francisco, CA

November 4, 2008

Download PPT (7.1MB)

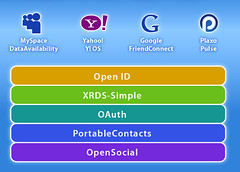

For the second year in a row, I gave a talk at Niall Kennedy’s Widget Summit in San Francisco. My my, what a difference a year makes! Last year, I was still talking about high-performance JavaScript, and while I’d started working on opening up the social web, the world was a very different place: no OpenSocial, no OAuth, no Portable Contacts, and OpenID was still at version 1.1, with very little mainstream support. Certainly, these technologies were not top-of-mind at a conference about developing web widgets.

But this year, the Open Stack was on everybody’s mind–starting with Cody Simms’s keynote on Yahoo’s Open Strategy, and following with talks from Google, hi5, and MySpace, all about how they’ve opened up their platforms using OpenSocial, OAuth, and the rest of the Open Stack. My talk was called “The Widgets Shall Inherit the Web”, and it explained how these open building blocks will greatly expand the abilities of widget developers to add value not just inside existing social networks, but across the entire web. John McCrea live-blogged my talk, as well as the follow-on talk from Max Engel of MySpace.

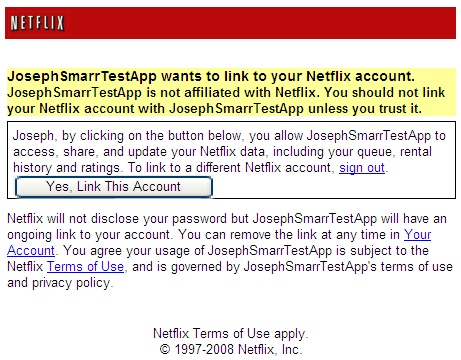

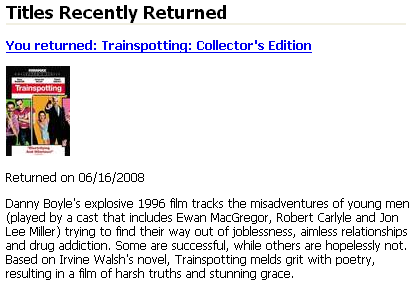

Most of the slides themselves came from my recent talk at Web 2.0 Expo NY, but when adapting my speech to this audience, something struck me: widget developers have actually been ahead of their time, and they’re in the best position of anyone to quickly take advantage of the opening up of the social web. After all, widgets assume that someone else is taking care of signing up users, getting them to fill out profiles and find their friends, and sharing activity with one another. Widgets live on top of that existing ecosystem and add value by doing something new and unique. And importantly, it’s a symbiotic relationship–the widget developers can focus on their unique value-add (instead of having to build everything from scratch), and the container sites get additional rich functionality they didn’t have to build themselves.

This is exactly the virtuous cycle that the Open Stack will deliver for the social web, so for this audience, it was music to their ears.

PS: Yes, I still voted on the same day I gave this talk. I went to the polls first thing in the morning, but I waited in line for over 90 minutes (!), so I missed some of the opening talks. Luckily my talk wasn’t until the afternoon. And of course, it was well worth the wait! 🙂

Joshua Allen